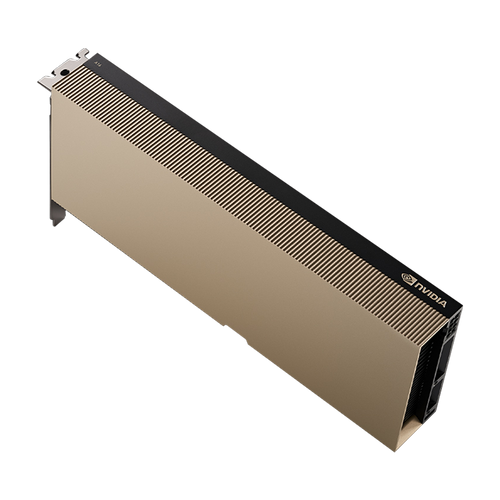

Introducing the NVIDIA H100 Tensor Core GPU, the most advanced chip ever built, now available for purchase at our online store! Designed to deliver an order-of-magnitude leap in accelerated computing, the H100 offers unparalleled performance, scalability, and security for every workload.

Built with 80 billion transistors on a cutting-edge TSMC 4nm process, the H100 features major advances in the NVIDIA Hopper™ architecture. Its fourth-generation Tensor Cores and Transformer Engine with FP8 precision extend NVIDIA's lead in AI, delivering up to 9x faster training and up to 30x faster inference on large language models compared with the previous generation. For HPC applications, the H100 triples FP64 floating-point operations per second (FLOPS) and adds dynamic programming (DPX) instructions that deliver up to 7x higher performance on dynamic programming algorithms.
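To make "dynamic programming" concrete: NVIDIA cites algorithms such as Smith-Waterman sequence alignment as targets for DPX instructions. The sketch below is a plain-Python CPU reference for the Smith-Waterman scoring recurrence, assuming a linear gap penalty; it does not use DPX or any GPU API, and the function name and scoring parameters (`match`, `mismatch`, `gap`) are illustrative choices. Its inner step, adding a score to a neighbouring cell and then taking a maximum, is the add-then-compare pattern that DPX instructions are designed to accelerate in hardware.

```python
def smith_waterman_score(a: str, b: str, match: int = 2, mismatch: int = -1, gap: int = 2) -> int:
    """Best local-alignment score of a and b (illustrative CPU reference, not DPX)."""
    prev = [0] * (len(b) + 1)  # row i-1 of the score matrix H
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)  # row i of H; column 0 stays 0
        for j in range(1, len(b) + 1):
            s = match if a[i - 1] == b[j - 1] else mismatch
            # Add-then-max over three neighbours, floored at 0 for local alignment.
            curr[j] = max(0, prev[j - 1] + s, prev[j] - gap, curr[j - 1] - gap)
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    print(smith_waterman_score("ACGT", "ACGT"))  # four matches at +2 each
```

Every cell depends only on the row above and the cell to its left, which is why this recurrence parallelizes well along anti-diagonals on a GPU.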

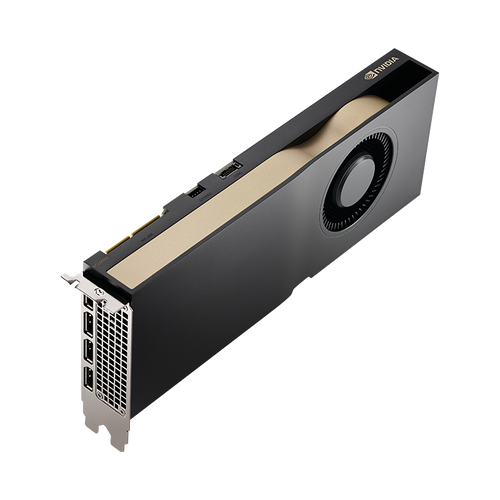

With NVIDIA® NVLink®, two H100 PCIe GPUs can be connected to accelerate demanding compute workloads, while the dedicated Transformer Engine handles large-parameter language models. This makes the H100 ideal for conversational AI applications, where it delivers up to 30x faster large language model inference than the previous generation.
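The Transformer Engine's FP8 support relies on 8-bit floating-point formats (E4M3 and E5M2) whose range is narrow, so tensors are scaled before being cast to FP8. The following is a simplified sketch, not the Transformer Engine library's API: it simulates rounding to E4M3 (3 mantissa bits, largest finite value 448) in plain Python and uses a single per-tensor scale derived from the tensor's maximum absolute value (amax). The function names are hypothetical, and real implementations add refinements such as amax history and scaling margins.

```python
import math

E4M3_MAX = 448.0        # largest finite E4M3 value: 1.75 * 2**8
E4M3_MANTISSA_BITS = 3  # E4M3 stores 3 explicit mantissa bits
E4M3_MIN_EXP = -6       # smallest normal value is 2**-6; subnormals reuse this exponent


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3 value, saturating at +/-448 (simulation only)."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    mag = min(abs(x), E4M3_MAX)
    # math.frexp gives mag = m * 2**e with 0.5 <= m < 1, so the leading-bit exponent is e - 1.
    _, e = math.frexp(mag)
    exp = max(e - 1, E4M3_MIN_EXP)
    step = 2.0 ** (exp - E4M3_MANTISSA_BITS)  # gap between neighbouring E4M3 values
    q = round(mag / step) * step              # round() breaks exact ties to even
    return sign * min(q, E4M3_MAX)


def quantize_per_tensor(values: list[float]) -> tuple[list[float], float]:
    """Scale so the largest magnitude maps to E4M3_MAX, then round each value."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale


def dequantize(q: list[float], scale: float) -> list[float]:
    """Undo the per-tensor scale to recover approximate original values."""
    return [v / scale for v in q]


if __name__ == "__main__":
    x = [0.01, -0.5, 2.0, 3.5]
    q, scale = quantize_per_tensor(x)
    print(scale)                 # 128.0
    print(dequantize(q, scale))  # [0.009765625, -0.5, 2.0, 3.5]
```

Scaling by amax lets the largest value use the top of the FP8 range; small values such as 0.01 lose precision (it comes back as 0.009765625), which is the trade-off FP8 training recipes manage.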

For mainstream servers, the H100 Tensor Core GPU comes with a five-year subscription, including enterprise support, to the NVIDIA AI Enterprise software suite. The suite simplifies AI adoption while delivering the highest performance, giving organizations the AI frameworks and tools they need to build H100-accelerated AI workflows such as AI chatbots, recommendation engines, vision AI, and more.

The H100 is an integral part of the NVIDIA data center platform, built for AI, HPC, and data analytics. It accelerates over 3,000 applications and is available everywhere from the data center to the edge, delivering both dramatic performance gains and cost-saving opportunities.

In the PCIe form factor, the H100 Tensor Core GPU pairs 80GB of HBM2e memory with 2TB/s of memory bandwidth, making it a must-have for high-performance computing. An NVLink bridge connects two H100 PCIe GPUs at 600 gigabytes per second (GB/s) bidirectional. For larger deployments with H100 SXM GPUs, the NVLink Switch System scales multi-GPU input/output (IO) across servers at 900 GB/s bidirectional per GPU, over 7x the bandwidth of PCIe Gen5, and delivers 9x higher bandwidth than InfiniBand HDR on the NVIDIA Ampere architecture.
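The bandwidth comparisons can be checked with simple arithmetic, assuming a PCIe Gen5 x16 link carries 128 GB/s bidirectional. At that baseline, 900 GB/s per GPU (the NVLink figure for the NVLink Switch System) works out to just over 7x, while the 600 GB/s two-GPU NVLink bridge works out to about 4.7x. The constant and function names below are hypothetical, and all figures are vendor-quoted peak rates rather than measured throughput.

```python
# Vendor-quoted peak rates in GB/s (bidirectional for links); not measured throughput.
PCIE_GEN5_X16_GB_S = 128.0   # assumed PCIe Gen5 x16 baseline
NVLINK_PER_GPU_GB_S = 900.0  # NVLink per GPU with the NVLink Switch System
NVLINK_BRIDGE_GB_S = 600.0   # two-GPU NVLink bridge for H100 PCIe
HBM_BANDWIDTH_GB_S = 2000.0  # 2 TB/s memory bandwidth
HBM_CAPACITY_GB = 80.0       # 80 GB memory capacity


def speedup(link_gb_s: float, baseline_gb_s: float = PCIE_GEN5_X16_GB_S) -> float:
    """Ratio of a link's peak bandwidth to the PCIe Gen5 x16 baseline."""
    return link_gb_s / baseline_gb_s


def full_memory_sweep_ms(capacity_gb: float = HBM_CAPACITY_GB,
                         bandwidth_gb_s: float = HBM_BANDWIDTH_GB_S) -> float:
    """Ideal time in milliseconds to read every byte of GPU memory once."""
    return capacity_gb * 1000.0 / bandwidth_gb_s


if __name__ == "__main__":
    print(f"NVLink 900 GB/s vs PCIe Gen5: {speedup(NVLINK_PER_GPU_GB_S):.2f}x")        # 7.03x
    print(f"NVLink bridge 600 GB/s vs PCIe Gen5: {speedup(NVLINK_BRIDGE_GB_S):.2f}x")  # 4.69x
    print(f"One pass over 80 GB at 2 TB/s: {full_memory_sweep_ms():.0f} ms")            # 40 ms
```

The 40 ms figure is a lower bound for touching the full 80GB once; real kernels rarely sustain peak bandwidth.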

Deploy H100 with the NVIDIA AI Platform, the end-to-end open platform for production AI built on NVIDIA H100 GPUs. It includes NVIDIA accelerated computing infrastructure, a software stack for infrastructure optimization and AI development and deployment, and application workflows to speed time to market.

Upgrade your data center with the NVIDIA H100 Tensor Core GPU and take advantage of its unprecedented performance, scalability, and security.